- Superslow AI Newsletter

Mixture-of-Experts Explained: Why 8 smaller models are better than 1 gigantic one

GPT-4 is rumored to be a Mixture-of-Experts, totaling 1.76T parameters. How does MoE work and why is it so powerful?

Hi everyone 👋

Today we’ll dive into rumors about GPT-4 as a Mixture of Experts (MoE) and what exactly MoE is, this week’s reading list, and cool Midjourney “zoom-out” demos.

Let’s dive in!

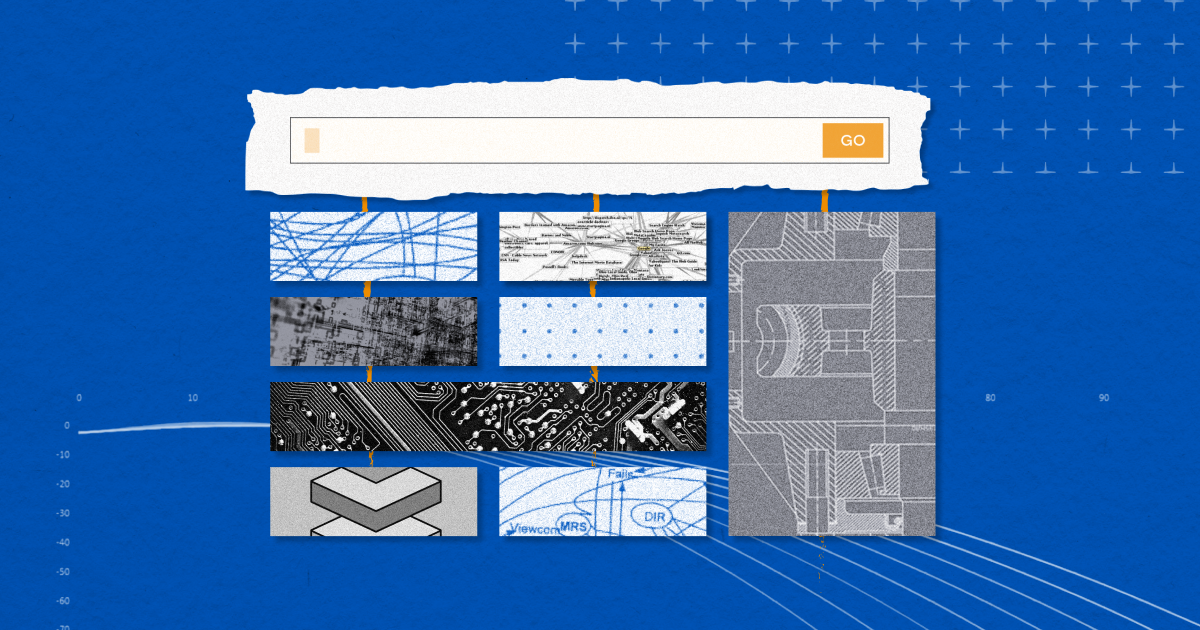

QR Generator: Try this out! It should take you to today’s post.

Thanks for reading! Hit subscribe to stay updated on the most interesting news in AI.

Loving this content? I’d love for you to spread the word! Here are a few ways you can help me out:

If you use one of the above links, I’ll send you my free list of top AI newsletters I read to stay on top of AI!

Now let’s get to today’s post 👇

📚 Concepts & Learning

Today we’re diving into a mixture of experts (MoE). It’s rumored that GPT-4 is powered by 8 expert models which are each 220B parameters. How does it work and why is it (rumored to be) used in GPT-4? Let’s dive in.

For some quick background on the GPT-4 rumors, here’s some internet context:

AI Twitter woke up this week to a new rumor about GPT-4. The sources are somewhat trustable and the info is plausible. Summarized in a sentence: GPT-4’s secret has been revealed—it’s not one model, but many. Here's the whole story:

If we believe the rumors, first leaked by George Hotz, GPT-4 isn't some breakthrough >1T monolithic architecture but an 8×220B mixture of experts (MoE) model. So, 8 smaller models tied together using a technique first implemented by Google in 2021.

GPT-4 may not be the technical and scientific achievement we thought it was. The news was reaffirmed by Soumith Chintala, cofounder of PyTorch at Meta, who implied the info was rather well-known in the inner circles of OpenAI.

They gave explicit claims but aren’t direct sources (not OpenAI staff). However, Mikhail Parakhin, Bing AI lead (as close as you can get to GPT-4 without working at OpenAI) said this the day before, hinting at the same idea: GPT-4 is not a model but several.

None of this takes any merit from GPT-4’s performance—it’s, after all, the best LM in existence—but it’s just… underwhelming. Not what people were expecting after a 3-year wait. The leading AI lab in the world dusting a 2-year-old technique raises questions about AI’s true state-of-the-art and the rate of progress.

Yet what I see looking back is that OpenAI masterfully executed their ploy—and they won big in the business and marketing dimensions:

- By hiding the details of the model, they created a lot of mystery around GPT-4, fueling speculation and hopes for an unpredictable—but surely amazing—future.

- They made it seem GPT-4 was so good, so unique, they had to conceal the technical specs from competitors like Google or open-source due to business pressures.

- They then had free rein to capture the conversation with their preferred narrative (AGI/superintelligence) and convince governments of the need for new regulatory agencies (that would regulate only the aspects they deemed more urgent).

- And, above all, they hid the fact that GPT-4 is actually a clever remix of a 2-year-old technique that Google implemented first. (OpenAI, just recently, hired the key people from Google that worked on MoEs.)

Why is this important for the future of AI?

First: scale, compute, and data weren’t the key to GPT-4 (as in what sets it apart from other models like Bard or Claude). Engineering ingenuity is. Sadly, scientific breakthroughs can’t be bought. Eureka moments can't be forced.

Second: MoE is an “old” technique. If the industry has to go back and dust off old ideas, maybe is a sign they’ve run out. It may imply we're not really going that fast milestone after milestone as companies, media, marketers, and arXiv make it seem.

Here are a few of the Twitter threads floating around on the topic from credible researchers:

@latentspacepod@realGeorgeHotz GPT4 is 8 x 220B params = 1.7 Trillion params

ok I wasn't sure how widely to spread the rumors on GPT-4 but it seems Soumith is also confirming the same so here's the quick clip!

so yes, GPT4 is technically 10x the size of GPT3, and all the small… twitter.com/i/web/status/1…

— swyx.ai (@swyx)

9:44 PM • Jun 20, 2023

Here’s the tweet by Soumith Chintala, co-founder of PyTorch at Meta:

i might have heard the same 😃 -- I guess info like this is passed around but no one wants to say it out loud.

GPT-4: 8 x 220B experts trained with different data/task distributions and 16-iter inference.

Glad that Geohot said it out loud.Though, at this point, GPT-4 is… twitter.com/i/web/status/1…

— Soumith Chintala (@soumithchintala)

9:21 PM • Jun 20, 2023

The rumor is: GPT-4 is a 1.76T parameter model dispersed across 8 expert models, each 220B parameters. That means each individual model (or expert) is still larger than GPT-3 (at 175B parameters). And all 8 expert models combined together produce a very large joint model.

Okay so how does a mixture of experts work, and why would we use it?

Mixture of experts is a model architecture that combines multiple “expert” models to generate outputs. Different subsets of parameters are considered “experts” in their particular domain(s), and they work together to produce the final output. Each expert has its own strength which it can specialize in and become very good at.

But does it work better than a generally intelligent single (aka dense) model?

In today’s post we’ll explore that question, but the short answer seems to be: yes! Beyond improved performance, MoE also lets you reach similar results on a smaller compute budget.

In this post, we’ll focus mainly on large language models although MoE can be applied to image models or multi-modal models as well.

Some background

Throughout this post there will be reference to dense vs sparse models.

A dense model uses all of its parameters for every computation. Dense models are the typical, vanilla style you’d think of with LLMs.

Sparse models are models where only a portion of the total number of parameters is activated (lights up). This is related to the mixture of expert architectures that we’ll dive into today.

Here’s an example:

Imagine you have a language translation model. It knows 5 different languages: English, Spanish, French, German and Italian.

If you want to translate ‘hola’ into English, you’d have to ask the whole model what ‘hola’ means, rather than just the parts of the model that speak Spanish:

Dense model

Input: What is ‘hola’ in English?

- English: I don’t know.

- Spanish: Hello.

- French: I don’t know.

- German: I don’t know.

- Italian: I don’t know.

Final answer: Hello.

It’s pretty inefficient to ask 4 different language models that don’t speak Spanish what a Spanish word means. So what if we could separate each expert?

Imagine you can separate the models into 5 expert models. Each of them has to learn basic grammar of their own language (which is context/knowledge they might have shared when they were 1 model), but now we only need to query one expert model when translating Spanish words.

Sparse model

Input: What is ‘hola’ in English?

- Classifier: I understand ‘hola’ is a Spanish word. Let’s ask our Spanish expert.

- English: N/A

- Spanish: ‘Hola’ means ‘Hello’.

- French: N/A

- German: N/A

- Italian: N/A

Final answer: Hello.

Not only do you get your answer faster, but you’ve saved a bunch of compute time and costs. Instead of doing a bunch of calculations across all the experts, you’ve only had to do calculations on 1/5 of the experts.
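To make the translation example above concrete, here’s a minimal toy sketch. Everything in it is made up for illustration: the `EXPERTS` dictionaries stand in for expert networks, and `classify_language` is a hypothetical stand-in for the classifier (in a real MoE this would be a small learned router, not a lookup). It just counts how many experts get queried in each setup.

```python
# Toy illustration only: real experts are neural sub-networks, not dictionaries.
EXPERTS = {
    "english": {"hello": "hello"},
    "spanish": {"hola": "hello"},
    "french": {"bonjour": "hello"},
    "german": {"hallo": "hello"},
    "italian": {"ciao": "hello"},
}


def dense_translate(word):
    """Ask every expert; return (answer, number of experts queried)."""
    answer, queried = None, 0
    for vocab in EXPERTS.values():
        queried += 1
        if word in vocab:
            answer = vocab[word]
    return answer, queried


def classify_language(word):
    """Hypothetical stand-in for the classifier/router.

    A real router is a cheap learned layer; here we simply look the word up.
    """
    for language, vocab in EXPERTS.items():
        if word in vocab:
            return language
    return None


def sparse_translate(word):
    """Ask only the expert the classifier picks; return (answer, experts queried)."""
    language = classify_language(word)
    if language is None:
        return None, 0
    return EXPERTS[language][word], 1


dense_answer, dense_cost = dense_translate("hola")
sparse_answer, sparse_cost = sparse_translate("hola")
print(dense_answer, dense_cost)
print(sparse_answer, sparse_cost)
```

Both versions give the same answer, but the sparse version only queries one of the five experts.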

Cool, what kind of experts can you have?

You might expect experts to specialize in high-level categories, like different languages, or objects like automobiles vs food items. But in practice the most effective specialization seems to happen at a much more abstract level, such as verbs vs punctuation vs numbers.

Why would we use sparse models?

When building a highly capable LLM, two of the main factors to take into account are: model capabilities and cost. One way to think about capabilities is the performance of the outputs:

Does it get the user the desired output?

Does it perform the desired function?

How well does it do that?

One way to think about cost is to consider compute:

How much does it cost to train the model?

How much does it cost to do inference (get an output)?

And then of course, what is the trade off of capabilities vs compute? How much more am I willing to spend to get a more capable model/better output?

Historically, there’s been a correlation between the amount of data, the model size, and the performance of the model.

Sparse models let you scale the total parameter count without a matching increase in the compute spent on each input. Scaling the total model size means you can scale the model capabilities. By scaling via a mixture of experts (MoE), you can increase capabilities while keeping your compute budget fixed: you add more expert models to the architecture, but each input still activates only a few of them. The intention is to keep the compute cost per input fixed while increasing capabilities.
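Here’s a rough back-of-the-envelope sketch of that idea. It assumes a simplified model where each input activates a fixed number of experts (`experts_per_token`, a name I made up) and ignores any parameters shared across experts, such as attention layers. The 220B expert size is just the rumored GPT-4 figure, and how many experts GPT-4 activates per input is not part of the rumor.

```python
def moe_parameter_counts(num_experts, params_per_expert, experts_per_token=1):
    """Return (total parameters, parameters active per input).

    Simplification: ignores parameters shared by all experts.
    """
    total = num_experts * params_per_expert
    active = experts_per_token * params_per_expert
    return total, active


# Parameter counts are in billions.
for num_experts in (1, 8, 64):
    total, active = moe_parameter_counts(num_experts, 220)
    print(f"{num_experts} experts: total={total}B, active per input={active}B")
```

The total grows with the number of experts (8 × 220B gives the rumored 1.76T), while the active parameters per input stay flat in this simplified picture.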

How do we pick which experts in the model to use?

MoE begins with a set of experts who have their own specializations. Then a router function decides which tokens should be routed to which experts. There are a few common ways of deciding which expert model to pass a token onto:

Top-k method

Top-p method

Hash routing

BASE routing

Reinforcement Learning routing

Expert choice routing

Source: Top-k Method

Top-k method

The above graphic denotes the top-k method, which has been common in prior MoE research. You can read about top-k here. FFN in the graphic above refers to Feed-Forward Network. You can read about FFN here.
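If you’d like to see the idea in code, here’s a minimal sketch of top-k routing for a single token, in plain Python. The function names are mine, the “experts” are toy functions on numbers, and renormalizing the gate weights over the chosen experts is one common variant that I’m assuming here. Real implementations work on batches of vectors with learned router weights.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts for one token.

    Returns [(expert_index, gate_weight), ...], with the gate weights
    renormalized so they sum to 1 over the chosen experts.
    """
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, router_logits, experts, k=2):
    """Weighted sum of the outputs of the chosen experts; the rest never run."""
    return sum(weight * experts[i](token) for i, weight in top_k_route(router_logits, k))


# Four toy "experts" (stand-ins for feed-forward networks).
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: -x, lambda x: x ** 2]
print(top_k_route([0.1, 2.0, -1.0, 2.0], k=2))
print(moe_layer(3, [0.1, 2.0, -1.0, 2.0], experts, k=2))
```

With k=2, only two of the four experts are evaluated for this token, which is where the compute savings come from.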

Expert choice routing

Another method of expert routing is called expert-choice routing, which you can dive into here. Expert-choice routing helps prevent over-specialization and under-utilization of particular experts in the model. Here’s a quick graphic on that method:

Source: Expert Choice Routing

Using expert-choice routing has shown significant improvements in capabilities compared to top-k methods. You can read more about that in this Google blog here.
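As a rough sketch of how expert-choice routing flips things around: instead of each token picking its favorite experts, each expert picks its favorite tokens, up to a fixed capacity. The function name, the toy scores, and the fixed `capacity` argument below are all assumptions for illustration, not the implementation from the Google work.

```python
def expert_choice_route(scores, capacity):
    """scores[t][e] is the affinity of token t for expert e.

    Each expert selects its `capacity` highest-scoring tokens, so every
    expert ends up with the same load by construction.
    Returns {expert_index: [token_index, ...]}.
    """
    num_tokens = len(scores)
    num_experts = len(scores[0])
    assignment = {}
    for e in range(num_experts):
        ranked = sorted(range(num_tokens), key=lambda t: scores[t][e], reverse=True)
        assignment[e] = ranked[:capacity]
    return assignment


# Toy scores: every token prefers expert 0 over expert 1.
scores = [
    [0.9, 0.1],
    [0.8, 0.2],
    [0.7, 0.3],
    [0.6, 0.4],
]
print(expert_choice_route(scores, capacity=2))
```

With token-choice top-1 routing, all four tokens here would pile onto expert 0. With expert choice, each expert takes exactly two tokens, so neither sits idle. Note that in this scheme a token can be picked by several experts or by none.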

If you’d like a deeper dive on the other expert routing methods, there is a great survey on page 9 here.

Okay, what other aspect of MoE should I consider?

Important hyperparameters to consider

The number of expert models

The size of each expert model

How many layers of expert models there are

GPT-4 is rumored to have 8 experts, each at 220B parameters.

So what is the optimal number of experts?

It depends.

Ultimately, it depends on the application (vision vs language) and on the specifications of your hardware system. Research by Shazeer et al., 2017 shows strong pre-training results with a large number of experts, while Du et al., 2021 and Zoph et al., 2022 find that other models perform best with fewer, larger experts.

Check out this graphic on the number of experts and their respective performance (taken from the appendix of the 2022 GLaM paper):

There seems to be peak performance somewhere between 64 and 256 experts. Interestingly, for some of these methods/models, performance tapers off as more experts are added. This backs up other research that suggests there is an optimal range for the number of experts: more doesn’t always mean better.

This may be confounded by the compute budget or dataset size, so it’ll be interesting to see more research in the future go into how the optimal number of experts relates to compute budget and dataset size. Would love to see a Chinchilla-style paper for expert-optimal models.

Even more interesting (again) is that GPT-4 is rumored to have only 8 experts, but 8 very large ones at 220B each. Each expert alone is larger than GPT-3 (175B). And its performance is currently state-of-the-art.

Results

Here’s another example of sparse models outperforming dense models, holding compute budget and prompting method fixed:

In the research on BIG-Bench tasks by Srivastava et al., 2022, sparse models outperformed dense models at a fixed compute budget. The sparse models are represented by the dotted lines and the dense models by the solid lines. Three prompting methods are shown: zero-, one-, and few-shot, which give the model zero, one, or a few examples in each prompt, respectively. For each prompting method, the sparse models perform better than their dense counterparts at the same compute budget.

Calibration tests on dense vs sparse models

The same research by Srivastava et al., 2022 found that sparse models outperformed dense models on multiple choice BIG-Bench tasks, with the aim to measure model calibration.

What does calibration measure?

Calibration measures how well the model understands the true probability of events. It measures the difference between the real probability of events and the model’s prediction of the probability of events. Calibration is important for language models because it reflects how reliable and trustworthy they are.
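One common way to put a number on this is expected calibration error (ECE): group predictions into bins by confidence, then compare the average confidence in each bin to how often the model was actually right. The sketch below is a generic version of that idea, not necessarily the exact metric used in the research above, and the bin count is an arbitrary choice.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """Weighted average gap between confidence and accuracy across bins.

    confidences: predicted probability of the chosen answer, each in [0, 1]
    correct: booleans, True where the chosen answer was right
    """
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * num_bins), num_bins - 1)
        bins[index].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_confidence = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_confidence - accuracy)
    return ece


# A model that says "90% sure" and is right 9 times out of 10 is well calibrated.
well_calibrated = expected_calibration_error([0.9] * 10, [True] * 9 + [False])
# A model that says "90% sure" but is right only half the time is overconfident.
overconfident = expected_calibration_error([0.9] * 10, [True] * 5 + [False] * 5)
print(well_calibrated, overconfident)
```

A lower value means the model’s stated confidence tracks reality more closely.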

One key finding showed that sparse models matched the calibration of dense models that used 10x more compute. That means sparse models can reach performance comparable to dense models on a smaller compute budget.

In the above graph, you can see the sparse models represented by the dotted-lines and the dense models represented by solid lines. Lower calibration error is better.

Limitations

Compute

One thing to note is that sparsity works well when you have lots of compute available on many accelerators (GPU/TPU).

Typically, models are trained using data parallelism, where different machines get different slices of the training/inference data. The machines that operate on the different slices of data can also be used to host many more model parameters. Sparse models are therefore a good fit when you train with data parallelism and/or serve at high throughput: training or serving on many machines that can together host all of the parameters.

Sparse models are a great fit when you can train or serve on many machines in parallel, so the additional model parameters from the experts have somewhere to live. But if you have a fixed or small compute budget, dense models might be a better fit. This is important to note for any indie hackers or builders who want to fine-tune open-source models or build their own models in-house. Sparsity works best if you have a lot of compute resources.

Overtraining and under-training

A key component of MoE architecture is the routing method. How do we route the right tokens or pieces of information to the right expert models? If we don’t do that well then certain expert models may be over-specialized and under-utilized, leaving a majority of the work to other experts. This defeats the purpose of “division of labor” (to steal a term from Adam Smith’s economic theory).
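A simple way to spot this problem is to count how many tokens each expert actually receives. Here’s a small sketch: the function names and the imbalance measure (the busiest expert’s share divided by the ideal even share) are my own choices for illustration, not a standard metric from the papers.

```python
from collections import Counter


def expert_load(assignments, num_experts):
    """Fraction of tokens routed to each expert.

    assignments: one expert index per token.
    """
    counts = Counter(assignments)
    total = len(assignments)
    return [counts.get(e, 0) / total for e in range(num_experts)]


def imbalance(load):
    """Busiest expert's share relative to a perfectly even split (1.0 = balanced)."""
    return max(load) * len(load)


skewed = expert_load([0, 0, 0, 1, 0, 0, 2, 0], num_experts=4)
balanced = expert_load([0, 1, 2, 3, 0, 1, 2, 3], num_experts=4)
print(skewed, imbalance(skewed))
print(balanced, imbalance(balanced))
```

In the skewed case, expert 0 handles three quarters of the tokens and expert 3 handles none, which is exactly the under-utilization described above.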

Lagging in fine-tuned settings

Looking to fine-tune GPT-4 in the future? If GPT-4 is a mixture-of-experts, one thing to note is that MoE models, especially at larger scales, tend to lag behind their dense counterparts after fine-tuning. This is because MoE models are slower at transferring knowledge to new domains.

Perhaps dense, open-source models will have an opportunity here due to this finding. Food for thought!

Worse at reasoning, better on knowledge-heavy tasks

Research has demonstrated that, after fine-tuning, sparse models performed worse on reasoning tasks. They did, however, perform better on knowledge-heavy tasks. This includes research on out-of-domain LM performance, and tasks in HellaSwag, PIQA, and Winogrande.

Conclusion

Sparsity reduces the training and inference costs, resulting in massive models with a better accuracy than their dense counterparts. But many open questions remain. For instance, we still poorly understand how the optimal number and size of experts depends on the task (e.g. should one use a few large experts or many small experts for translation?). As many works have pointed out, achieving strong out-of-domain generalization is less straight-forward and better explanations are needed. Further, most sparse expert models have relatively low architectural diversity where sparse layers are interspersed at regular intervals. Future models may benefit from less standardized structure and heterogeneous expert architectures. Additionally, the appropriate granularity of sparsity still must be determined: most works have focused on experts replacing components, such as feed-forward network layers, but benefits of more fully modular, independent experts were discovered (Gururangan et al., 2021; Li et al., 2022).

Okay, so what’s an example of this kind of MoE model? Aside from (maybe) GPT-4?

One model that researchers used as a foundation for experimenting with MoE methods is Google Brain’s GLaM model.

Enjoying this post?

Feel free to share with any AI-curious friends. Cheers!

What is GLaM?

- Sparsely activated mixture-of-experts (decoder-only) architecture to scale the model capacity with less training cost compared to dense variants

- Largest GLaM: 1.2T parameters

- ~7x larger than GPT-3

- 64 experts per MoE layer

- Each token activates 96.6B (8%) parameters

- Consumes 1/3 of the energy used to train GPT-3

- Requires 1/2 of the compute budget (FLOPs) for inference

- Achieves better overall zero-shot, one-shot and few-shot performance across 21 Natural Language Understanding (NLU) and 8 Natural Language Generation (NLG) tasks
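As a quick sanity check on the numbers in the list above, here’s the arithmetic behind the “8%” and “~7x” figures. The 175B size for GPT-3 is the figure quoted earlier in this post, and the variable names are mine.

```python
glam_total_params = 1.2e12    # largest GLaM: 1.2T parameters
glam_active_params = 96.6e9   # parameters activated per token
gpt3_params = 175e9           # GPT-3 size quoted earlier in this post

active_fraction = glam_active_params / glam_total_params
size_ratio = glam_total_params / gpt3_params

print(f"Active per token: {active_fraction:.1%}")
print(f"GLaM vs GPT-3 size: {size_ratio:.1f}x")
```

So GLaM is roughly 7x the size of GPT-3 in total parameters, but each token touches fewer parameters (96.6B) than a full pass through GPT-3 (175B).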

The big headlines here are:

The largest model is 1.2T (smaller than the rumored 1.76T GPT-4)

Only 8% of the parameters activate on average per query! (Sounds a lot like how we only use 10% of our brain at any given time…? Something something neuroscience something neural networks 🧠)

It uses half the compute per output that GPT-3 does

It performs better than GPT-3

Here we can see the relative performance of GLaM compared to GPT-3. This paper was published in August 2022, so GPT-4 hadn’t yet come out. On average accuracy, GLaM outperforms the dense GPT-3 model, and uses ~50-65% less compute than GPT-3. Pretty amazing! Improved performance on a smaller budget.

Two conclusions:

- GLaM can be trained with significantly less data than dense models to reach similar zero-shot and one-shot performance

- If the same amount of data is used, sparsely activated models perform significantly better

In the 4 graphs below, the green solid lines correspond to the sparse mixture-of-experts models, evaluated with zero-shot (no examples), one-shot (one example), and few-shot (a few examples) prompting. The reddish-orange dotted lines represent the dense (non-MoE) models in the same zero-, one-, and few-shot settings. As you can imagine, having one example to learn from is better than having zero examples, and having a few examples is even better than having one. Examples improve model performance because they give the model more information about what a desirable output looks like.

From these graphs, it’s clear that sparse MoE models comparatively perform better than their dense counterparts.

Results of GLaM

These graphs compare the performance of GPT-3 to the largest GLaM variant (64B/64E in the paper’s naming: 1.2T total parameters with 64 experts per MoE layer). The green bars represent where GLaM outperformed GPT-3 and by how much. The orange bars denote where GLaM underperformed compared to GPT-3 and by how much.

Impressively, GLaM achieved this level of performance on around 1/3 of the compute budget of GPT-3. The left graph shows the per-inference (per-output) cost: GLaM can generate an output with about 50% less compute than GPT-3. The right graph shows the training costs: GLaM was trained on 1/3 of the compute budget of GPT-3.

The upshot

GLaM performs better on the majority of natural language understanding and generation tasks compared to GPT-3, and did it on a smaller compute budget. GLaM saves on costs both at training time and deployment time.

What other models are examples of MoE architecture?

The Switch Transformer model is another well known one.

Continue reading

I recommend all of these resources if you want a deeper dive:

Other reading

Google blog on GLaM (Link)

Google blog on MoE (Link)

The Sparsely-Gated MoE, Shazeer, Hinton, Dean (Link)

Switch Transformers: Scaling to Trillion Parameter Models (Zoph, Fedus, and Shazeer of Character.ai) (Link)

Stable and Transferable Sparse Expert Models (Link)

Parallel Training of Expert Language Models (Link)

What do you think of MoE? Is there other research you’d recommend checking out? Drop me a line or reply here if so!

🗞️ News

What’s on my reading list?

🎁 Miscellaneous

You can make a video like this in like 5 min.

Just do a custom zoom out in Midjourney. Set to --zoom 1.25 (zoom out 1.5x will work too, but wont be as smooth)

Save your images (in order) upload them to @runwayml frame interpolation tool. Click generate.

That's it

— Nick St. Pierre (@nickfloats)

9:50 PM • Jun 25, 2023

That’s it! Have a great day and see you next week! 👋

What did you think about today’s newsletter? Send me a DM on Twitter @barralexandra or reply to this email!

Thanks for reading Superfast AI.

If you enjoyed this post, feel free to share it with any AI-curious friends. Cheers!