
Synthetic vs Real Data: Why do models perform worse when trained on synthetic data?

Models suffer from catastrophic forgetting and data poisoning when trained on synthetic data, new research shows.

Hi everyone 👋

Happy 4th of July to anyone who’s celebrating! I hear it’s raining on the East Coast, so hopefully the fireworks and festivities get rescheduled soon. I’ve always loved celebrating the 4th: backyard BBQs, cold drinks, popsicles, and of course fireworks to end the night 🙂 What’s not to love!

In the meantime, onto today’s post! Today we’ll dive into synthetic vs real data, this week’s reading list, and some fun Midjourney-created Barbie remixes.

Let’s dive in!

DALL-E on Sam Altman in Barbie’s World


📚 Concepts & Learning

Today we’re going to talk about training models on synthetic vs real data. What does training on synthetic data look like, and how do models trained on synthetic data perform compared to models trained on real data? In this post, real data usually refers to human-generated data, but it can also refer to real-world data like business records stored in company data lakes. It can also come from blogs, news articles, forums like Reddit, social media platforms like Twitter, and more. The idea is that this data originated from humans or some human-derived action.

In contrast, synthetic data refers to model-generated data. For example, let’s say you want a random number between 1 and 10. Instead of asking your office mate to come up with a number (that would be human-generated), you use Excel’s random number function to pick one. Of course, synthetic data can get a lot more complicated than that. We’ll get into some examples in this post.

To motivate today’s discussion: one of the key issues researchers are concerned about is the growing amount of AI-generated text online. If we’re scraping Reddit, Twitter, Wikipedia, and other web data to train language models today, will we keep doing that in the future? And if so, what happens to the quality of future models when a significant (and increasing) share of that data is AI-generated?

But before we dive in fully, let’s talk about how answers are distributed. It helps to picture good answers spread over a bell curve: some are more probable, and others are less probable.

Here’s an example:

What sandwich should I eat today?

Imagine you ask an LLM chatbot like ChatGPT to answer this prompt:

Give me some suggestions for a sandwich I can make at home.

Some responses will be very popular and reasonable:

- Ham and cheese

- PB&J

- Turkey Club

- etc.

Some answers will be less popular, but probably still reasonable. We’d consider these answers one or more standard deviations away from the peak:

- Peanut butter and fluff (a New England favorite for any fans out there 💛)

- Cheese and Vegemite (if you’re a fan of Vegemite, I want to be friends)

- etc.

But some sandwiches will sit in the tails of the distribution, possible but very unlikely:

- Mayo and pickles

- Vaseline toastie (reference song for a fun throwback)

- Honey and dirt

- etc.

The key concept here is that some answers sit in the tails of the distribution and others are closer to the peak. Answers near the peak are the ones that appear most frequently in the LLM’s training dataset.

Check out this post for some background on RLHF and supervised training, and for insight into how LLMs are trained.

If you sample from a real human-created dataset, you might find (and be surprised to find!) honey-and-dirt sandwiches in it. Granted, honey-and-dirt might only appear 0.0001% of the time (1 in a million), but it’s still there. This can happen when you pre-train your models on data scraped from the web, especially if you use lightly filtered sources like Reddit or Twitter for your language data. The good news is that, since honey-and-dirt represents only 0.0001% of your dataset, you’d expect to sample about 1 million sandwich ideas, on average, before getting honey-and-dirt back.
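To put numbers on “1 in a million”, here’s a small Python sketch (the function names are ours, for illustration). It assumes each sampled sandwich idea is an independent draw, so the number of draws until honey-and-dirt first appears follows a geometric distribution with mean 1/p, and the chance of seeing it at least once in n draws is 1 - (1 - p)^n.

```python
def expected_draws_until_first(p: float) -> float:
    """Mean of a geometric distribution: average number of draws until the first hit."""
    return 1.0 / p


def prob_at_least_once(p: float, n: int) -> float:
    """Chance that an item with per-draw probability p shows up at least once in n independent draws."""
    return 1.0 - (1.0 - p) ** n


P_HONEY_AND_DIRT = 1e-6  # 0.0001% = 1 in a million, as in the example above

print(expected_draws_until_first(P_HONEY_AND_DIRT))  # about 1,000,000
for n in (1_000, 1_000_000, 5_000_000):
    print(n, round(prob_at_least_once(P_HONEY_AND_DIRT, n), 4))
```

So “you’d have to sample a million” is really an average: after a million draws there’s only about a 63% chance (1 - 1/e) that honey-and-dirt has shown up at least once.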

In today’s post, we’ll talk about synthetic data, or model generated datasets. There are two tensions to discuss:

What happens when statistically relevant data is under-represented in your generated dataset?

What happens when statistically relevant data is over-represented in your generated dataset?

Let’s break down both ideas:

Imagine you want to train a model on generated data. You asked 100 humans what sandwich ideas they wanted to contribute to the training of this model, and they gave answers in the following distribution:

- 35 Ham & Cheese

- 35 PB&J

- 5 PB & Fluff

- 5 Vegemite and Cheese

- 1 Vaseline Toastie

- 1 Honey-and-Dirt

- 18 distributed over other sandwiches

The most popular answers are Ham & Cheese and PB&J. But Boston users might, once in a while, prefer to be given PB & Fluff. Luckily, the model will return PB & Fluff 5% of the time.

If this model generates a dataset to train the next iteration of the model, it might focus on the highest-probability responses, because the other sandwich ideas are sampled at lower rates. This might change the distribution of sandwiches to:

- 40 Ham & Cheese

- 40 PB&J

- 7 PB & Fluff

- 7 Vegemite and Cheese

- 0 Vaseline Toastie

- 0 Honey-and-Dirt

- 6 distributed over other sandwiches

The Boston users are still happy because PB & Fluff appears 7% of the time, and they don’t miss the Honey-and-Dirt recipes.
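One simple way to model “focusing on the highest-probability responses” is sampling with a temperature below 1, which sharpens the distribution. Here’s a minimal sketch under that assumption (it isn’t necessarily how any real chatbot builds its next training set, and `apply_temperature` is our own helper). It starts from the first-generation counts above and, as a simplifying assumption, treats the 18 “other” answers as 18 different sandwiches with one vote each.

```python
# First-generation counts from the example above. The 18 "other" answers are
# assumed (for illustration) to be 18 different sandwiches with one vote each.
counts = {
    "Ham & Cheese": 35,
    "PB&J": 35,
    "PB & Fluff": 5,
    "Vegemite and Cheese": 5,
    "Vaseline Toastie": 1,
    "Honey-and-Dirt": 1,
    **{f"Other sandwich {i}": 1 for i in range(1, 19)},
}


def normalize(weights: dict[str, float]) -> dict[str, float]:
    """Turn non-negative weights into probabilities that sum to 1."""
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}


def apply_temperature(probs: dict[str, float], temperature: float) -> dict[str, float]:
    """Rescale p -> p ** (1 / T) and renormalize.

    T < 1 sharpens the distribution (popular answers gain share);
    T > 1 flattens it (rare answers gain share).
    """
    return normalize({k: p ** (1.0 / temperature) for k, p in probs.items()})


gen0 = normalize(counts)
gen1 = apply_temperature(gen0, temperature=0.5)
for name in ("Ham & Cheese", "PB & Fluff", "Honey-and-Dirt"):
    print(f"{name:15s} gen0={gen0[name]:.3f} gen1={gen1[name]:.3f}")
```

This won’t reproduce the exact toy numbers in the lists above, but it moves in the same direction: repeat the step and the popular sandwiches keep gaining share while the tail shrinks. Calling the same function with T > 1 has the opposite effect, which is roughly what turning the temperature up does when you ask a chatbot for a creative answer.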

Over-representation:

But the California users are upset: “Why am I getting this thing called Fluff 7% of the time? That’s a crazy sandwich idea! And why am I getting Ham & Cheese almost half the time?”

This is a case of over-representation. Nobody wants the same Ham and Cheese sandwich every day. So giving the model the ability to return diverse answers is important.

The process repeats: to create the training dataset for the next model, the current model might focus on the highest-probability responses and change the distribution of sandwiches to:

- 50 Ham & Cheese

- 50 PB&J

- 0 PB & Fluff

- 0 Vegemite and Cheese

- 0 Vaseline Toastie

- 0 Honey-and-Dirt

- 0 distributed over other sandwiches

Now not only is there an over-representation problem, there’s also an under-representation problem.

Under-representation:

Now users in California no longer get PB & Fluff sandwiches (great), but that means no one else gets them either, including the Boston fans. This makes the sandwich idea model very boring, and encourages users from both coasts to return to Google for recipe ideas rather than use this LLM chatbot.

But wait, there’s another case of over-representation and under-representation:

Imagine that honey-and-dirt, by random chance, did make it through to the second generation of the model. Now it might be over-represented compared to the real, original data, and it might stay over-represented in future generations. That comes at the cost of other sandwiches, like PB & Fluff, which become under-represented compared to the real, original data.

In these toy examples, over- and under-representation might not seem like a big deal, but in practical applications, diversity matters. Imagine if ChatGPT could only ever come up with one standard cover letter or resume template. That wouldn’t be helpful for most people, and it’d probably be pretty boring.

In other applications, it might limit models’ ability to be creative and come up with interesting poems, prose, and jokes. It would definitely make AI rap battles much more boring.

Here’s a visualization of what it would look like to bias towards the most popular responses.

Generation 0 is the initial distribution of data.

As you can see, Generation 9 has the highest peak around 0, and the shortest tails.
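The shrinking tails in that figure can be reproduced with a toy simulation. In this sketch (our own assumption about the sampling bias, not the paper’s exact procedure), each generation fits a normal distribution to its training data, samples a new dataset from that fit, and drops any sample more than two standard deviations from the mean, mimicking a generator that avoids unlikely answers. The 2-sigma cutoff and the dataset size are hypothetical choices.

```python
import random
import statistics


def next_generation(data: list[float], n: int, rng: random.Random, cutoff: float = 2.0) -> list[float]:
    """Fit a normal to `data`, sample n points from it, and drop points in the tails.

    The tail cutoff (in standard deviations) is a hypothetical stand-in for a
    generator that under-samples unlikely answers.
    """
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data)
    samples: list[float] = []
    while len(samples) < n:
        x = rng.gauss(mu, sigma)
        if abs(x - mu) <= cutoff * sigma:  # keep only "popular" answers
            samples.append(x)
    return samples


rng = random.Random(0)
data = [rng.gauss(0.0, 1.0) for _ in range(10_000)]  # Generation 0: the real data
spreads = [statistics.pstdev(data)]
for _ in range(9):  # Generations 1..9
    data = next_generation(data, n=10_000, rng=rng)
    spreads.append(statistics.pstdev(data))

for gen, s in enumerate(spreads):
    print(f"Generation {gen}: std = {s:.3f}")
```

With a 2-sigma cutoff, the spread shrinks by roughly 12% per generation (the standard deviation of a normal truncated at plus or minus two sigma), so by Generation 9 the distribution is far narrower and more peaked around 0.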

So what are the issues at hand?

- Synthetic data never perfectly represents real data: any generated data is merely an approximation of the patterns in the real data.

- In the process of producing synthetic data that resembles real data, biases or inaccuracies can be amplified in subsequent models and datasets.

- Overall, synthetic data can suffer from quality issues: it can be noisy, have missing values, or contain inconsistencies that lower its quality.

What is model collapse?

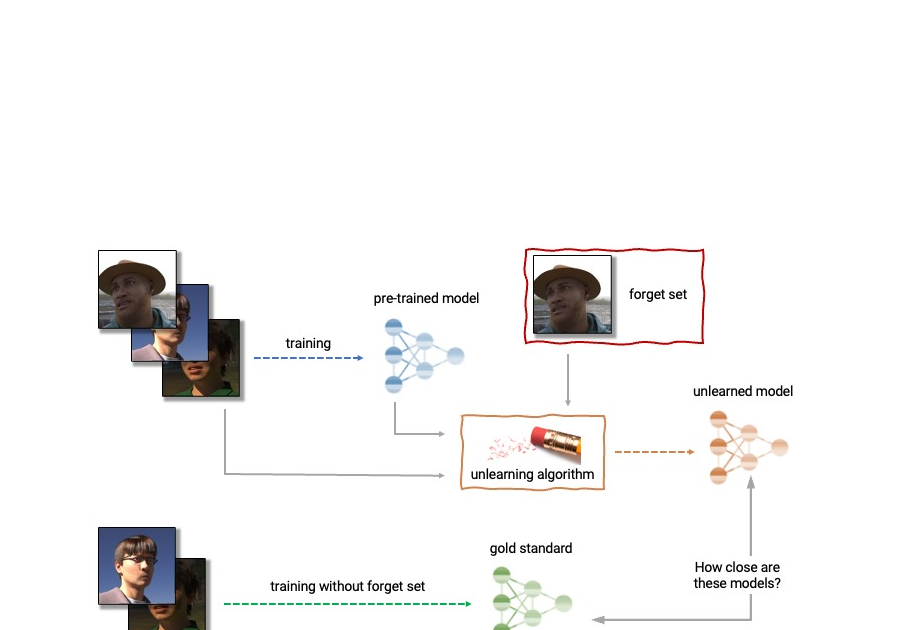

Model collapse occurs when pre-trained models are fine-tuned on AI-generated datasets and the small inaccuracies and biases in those datasets compound over time. They compound because earlier models “pollute” the training datasets of later generations of models. This paper covers two issues that arise from model collapse:

Catastrophic forgetting: This occurs when models forget prior information that they’ve learned from previous training rounds when they are presented with new information or data.

Data poisoning: This occurs when models learn from bad or over-represented data and treat that data as more representative of desirable output than it actually is. This generally happens when bad data in the initial dataset isn’t filtered out before training.

Data poisoning via inaccurate/unrepresentative data sampling

Imagine the honey-and-dirt sandwich was 1 in a million in the original dataset. Say that in a random sample of 10,000 sandwich recipes, honey-and-dirt happens to make it through to the next dataset. Now it represents 1 in 10,000 recipes to sample from. Say that in the next round you sample 1,000 recipes, and by statistical chance the honey-and-dirt recipe makes it through again. Now the recipe is 1 in 1,000. Next, imagine you’re one of 1,000 people who ask an LLM chatbot (like ChatGPT) what sandwich to have for lunch, and you specifically ask for a creative recipe, so you turn the ‘temperature’ up on your query. The LLM could return ham & cheese, which represents 50% of the dataset, or it could give a more creative (or riskier) answer closer to the tails. A higher temperature makes lower-probability answers more likely to be picked, and at 1 in 1,000, roughly one of those 1,000 people would get honey-and-dirt even without turning the temperature up. How about a honey-and-dirt sandy? 🙂

This process can poison the final dataset in a training sequence with an over-representation of bad data points that would be much rarer if sampled from the original dataset.
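Here’s the arithmetic behind that chain as a short sketch. The sample sizes (10,000, then 1,000) come from the example; the helper name is ours, and we assume the recipe survives exactly once in each sample. It shows how unlikely each lucky survival is, and how much the recipe’s share is inflated when it does survive.

```python
def survival_prob(p: float, n: int) -> float:
    """Chance that an item with frequency p appears at least once in n independent draws."""
    return 1.0 - (1.0 - p) ** n


p = 1e-6  # honey-and-dirt in the original, human-written data
for n in (10_000, 1_000):
    chance = survival_prob(p, n)
    new_p = 1.0 / n  # assumed: it survives exactly once, so its new frequency is 1 / n
    print(f"sample of {n:>6}: survives with prob {chance:.4f}; "
          f"if it does, frequency goes {p:.0e} -> {new_p:.0e} "
          f"({new_p / p:,.0f}x)")
    p = new_p
```

Each step is a long shot (about a 1% chance, then about a 10% chance), but when it does happen twice in a row, the recipe ends up 1,000 times more common than it was in the original data.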

How does the generated data sampling process work?

You can see an example of the generated dataset and the sampling process here:

What kinds of errors lead to model collapse?

Statistical approximation error

These are errors that arise because the model only ever sees a finite number of samples, so the probabilities it learns are effectively rounded.

If your model learns how likely each output is from rounded probabilities (e.g. a honey-and-dirt sandwich that is really 0.0001% likely), it will round the importance of outputs up or down in ways that don’t reflect reality (or your desired output). This can lead to cutoffs that are overly harsh or overly permissive and can skew the next generated dataset.

This would be solved if you could sample infinite examples.
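A quick way to see this: estimate an item’s probability by counting how often it shows up in n samples. The estimate can only be a multiple of 1/n, so a rare item is often estimated at exactly 0 (and then vanishes from the next generation), while a lucky draw overestimates it. This sketch uses a hypothetical rare item with a true probability of 1 in 10,000; the function name is ours.

```python
import random


def estimate_frequency(p_true: float, n: int, rng: random.Random) -> float:
    """Estimate an item's probability from n independent draws (hits / n)."""
    hits = sum(1 for _ in range(n) if rng.random() < p_true)
    return hits / n


rng = random.Random(42)
P_RARE = 1e-4  # hypothetical rare item: 1 in 10,000
for n in (100, 1_000, 10_000, 1_000_000):
    est = estimate_frequency(P_RARE, n, rng)
    print(f"n={n:>9,}: estimate={est:.6f}  (true={P_RARE})")
```

As n grows, the estimate settles near the true value, which is the point above: with infinitely many samples, this kind of error would go away.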

Functional approximation error

This occurs when your model estimates the relationship between input and output and produces predictions about inputs that don’t actually exist.

What does that mean? Imagine you have a classification model that predicts the relationship between a person’s height and their gender. Great, now for a specific input (height) you can predict the probable gender (output). But this model also predicts what gender someone who is 10 feet tall would be. Except no one is 10 feet tall, so the model now comes up with predictions for inputs that wouldn’t be in the original, real, human-generated dataset.
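Here’s a toy version of that failure mode. The logistic model below uses made-up coefficients (hypothetical, not fitted to any real data) and predicts a generic “class 1” label from height in inches. Nothing stops it from confidently answering for a 10-foot (120-inch) person, an input no real dataset contains.

```python
import math

# Hypothetical, hand-picked coefficients for illustration only (not fitted to real data).
WEIGHT = 0.5     # change in log-odds per extra inch of height
MIDPOINT = 67.0  # height in inches where the model is 50/50


def predict_proba(height_in: float) -> float:
    """Toy logistic model: probability of 'class 1' for a given height in inches."""
    return 1.0 / (1.0 + math.exp(-WEIGHT * (height_in - MIDPOINT)))


for height in (60.0, 67.0, 74.0, 120.0):  # 120 in = 10 feet: no real person is this tall
    print(f"{height:5.0f} in -> P(class 1) = {predict_proba(height):.4f}")
```

The model never says “I don’t know”; it returns a near-certain answer for an impossible input. If outputs like these are used to generate training data, those made-up cases can leak into the next dataset.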

How did the researchers test this?

They ran three experiments: a Gaussian Mixture Model (GMM) test, a Variational Autoencoder (VAE) test, and an LLM test.

Gaussian Mixture Model (GMM)

The test: The model is tasked with generating data points that match the distribution of the real, original dataset, which is made up of two artificially generated Gaussian clusters. Here’s what the original data looked like, alongside the model’s first generation attempt:

It does a pretty good job on the first try! The purple and yellow dots fall in roughly the same areas as they do in the real dataset, and the frontier that divides the yellow and purple dots looks pretty similar as well.

Okay what about later iterations?

Yikes! The model performance degrades pretty quickly, and in surprisingly unpatterned ways. There is no consistent frontier separating purple from yellow dots, nor are the clusters of similar size or location. You can see which iteration each graph represents by the number at the top center of each.

The results: By iteration 50, the model misperceives the original, real data. By iteration 2000, very little variation remains in the data, as outputs near the mean are given ever-higher probability.
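The paper’s GMM setup uses two clusters in two dimensions; as a simpler stand-in (our simplification, not the paper’s code), this sketch refits a single one-dimensional normal distribution to a small sample drawn from the previous generation’s fit, with no tail-dropping at all. The sample size of 100 per generation is a hypothetical choice. Finite sampling alone is enough to make the spread drift toward zero over many generations.

```python
import random
import statistics


def recursive_fit(generations: int, n: int, seed: int = 0) -> list[float]:
    """Repeatedly fit a normal to n samples drawn from the previous fit.

    Returns the fitted standard deviation after each generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # Generation 0: the "real" distribution
    history = [sigma]
    for _ in range(generations):
        samples = [rng.gauss(mu, sigma) for _ in range(n)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples, mu)  # maximum-likelihood estimate
        history.append(sigma)
    return history


history = recursive_fit(generations=2000, n=100)
for gen in (0, 50, 500, 2000):
    print(f"Generation {gen:>4}: std = {history[gen]:.2e}")
```

Each refit is slightly too narrow on average, and the errors compound, so by late generations almost no variation is left, echoing the GMM result. In this simple model, more samples per generation slow the collapse but don’t remove the downward drift.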

Variational Autoencoder (VAE):

The test: The model is tasked with generating images of hand-written numbers.

The results: Here’s a visual example of how the vision model degrades as it’s trained on generated datasets further downstream:

The Large Language Model (LLM): OPT-125M from Meta

The test: Two models were fine-tuned:

The first was fine-tuned and tested 5 times with no sampling from the real dataset

The second was fine-tuned and tested 10 times with sampling from the real dataset: each time the model was fine-tuned, 10% of the training data was sampled from the real dataset
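Here’s a minimal sketch of how a training set with 10% real data might be assembled for each fine-tuning round. The function name, the exact-count split, and the shuffling are our assumptions for illustration; this is not the researchers’ code.

```python
import random


def mix_training_set(real: list[str], synthetic: list[str], size: int,
                     real_fraction: float, rng: random.Random) -> list[str]:
    """Build a shuffled training set where `real_fraction` of the examples are real.

    Real and synthetic examples are drawn without replacement, so each pool
    must be large enough for its share.
    """
    n_real = round(size * real_fraction)
    n_synth = size - n_real
    mixed = rng.sample(real, n_real) + rng.sample(synthetic, n_synth)
    rng.shuffle(mixed)
    return mixed


rng = random.Random(0)
real_docs = [f"human text {i}" for i in range(1_000)]      # hypothetical real data
synthetic_docs = [f"model text {i}" for i in range(1_000)]  # hypothetical generated data
batch = mix_training_set(real_docs, synthetic_docs, size=500, real_fraction=0.1, rng=rng)
print(sum(doc.startswith("human") for doc in batch), "of", len(batch), "examples are real")
```

Fixing the count (rather than flipping a coin per example) guarantees every round gets exactly its 10% share of real data.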

The researchers then measured performance using the model’s perplexity. Essentially, perplexity measures how surprised the model is when it sees the real answer: the less probability the model assigned to the actual text, the higher the perplexity.
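Perplexity is usually computed as the exponential of the average negative log-probability the model assigned to each token of the real text. Here’s a minimal sketch that takes those per-token probabilities as a plain list (in practice they’d come from the model; the numbers below are made up).

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp(mean negative log-probability) of the tokens that actually occurred."""
    nll = [-math.log(p) for p in token_probs]
    return math.exp(sum(nll) / len(nll))


confident = [0.9, 0.8, 0.95]  # model expected the real tokens
surprised = [0.1, 0.05, 0.2]  # model put little probability on them
print(perplexity(confident), perplexity(surprised))
```

A perplexity of 1 means the model put all its probability on the right tokens. The surprised example works out to a perplexity of 10, as if the model were picking uniformly among 10 options at each step.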

Perplexity test

Let’s say you run this test:

User Input: What is the capital of France?

Model: I think it’s Cannes (à la the film festival)

User Eval: No, it’s Paris.

Model: Wow, I did not expect that based on how many IG influencers talked about Cannes this summer!

Perplexity score: 65. Higher scores mean more confusion, so they are worse.

The results: Models trained with a 10% sample of real data had lower perplexity scores, meaning they performed better overall.

Note the difference in the y-axis between the two graphs. The left graph shows the tests run with no real data preserved, and the right graph shows the tests run with 10% of the real data preserved.

One interesting outcome of this test is that models trained with a 10% sample of real data seem to have lower perplexity scores on average (lower is better: it means the model is less confused or surprised), and their perplexity scores cluster more tightly than in the 0% real data cases (see blue vs brown in both graphs).

An outcome of this insight might be that future training should always include some sample of real, human-generated data to combat model collapse. Perhaps there is an optimistic path forward that simply requires some human-labeled data, rather than an all-or-nothing choice between synthetic and real data.

The upshot

It’s possible that we will be in a world where synthetic data trains the next level of ML models. And these researchers have shown in theory and in three experiments that training on synthetic data can result in worse performing models.

But one factor that isn’t addressed by this research is: much of what’s written online or published by humans may be LLM-assisted, but it’s often still reviewed, edited and filtered by humans.

More than that, if the claim is that ML models will over-bias towards less risky answers over time, I think this is combated by human preferences. How? Let me give an example:

If I ask ChatGPT to create a cover letter for me for a job I’m excited about, it will give me a pretty standard template. Pretty good, but very boring.

So instead, I’ll give ChatGPT more information about myself to nudge it to create a more custom cover letter with unique facts and accomplishments only I have.

Now the answer is a lot more creative and non-standard. So when ChatGPTv2 is trained on my cover letter, it won’t be trained on a standard template, but rather one that includes unique additions provided and edited by me, a human.

I think this is true of Twitter comments, LinkedIn posts, AI-generated gift inspo, birthday card content, and much more.

In short, I think this research underestimates how much editing humans will do before they post LLM-assisted text online. Or maybe that question is simply out of scope, and this is an interesting standalone paper for what it contributes to the ML training discussion. I’m happy to stand by the latter :)

The final upshot of the research: data produced by humans will become even more valuable in the future, so that we continue to have realistic distributions of tail data points.


Now, onto the news and fun 👇

🗞️ News

A great roundup of AI news

🎁 Miscellaneous

Bing solves CAPTCHA

Bing cracks CAPTCHA, then backpedals (via AI Breakfast, @AiBreakfast, Jun 23, 2023)

It’s a Barbie world

In honor of the upcoming Barbie movie and the crazy (amazing) marketing campaigns they’ve been running:

But it’s actually NVIDIA’s world

That’s it! Have a great day and see you next week! 👋

What did you think about today’s newsletter? Send me a DM on Twitter @barralexandra or reply to this email!

Thanks for reading Superfast AI.

If you enjoyed this post, feel free to share it with any AI-curious friends. Cheers!